LeNet

2024/2/19

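Key points for this section

- Understand the LeNet network structure

- Generalize from building LeNet to building CNN models in general

- Learn how to set up GPU acceleration for model training and how to define a training function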

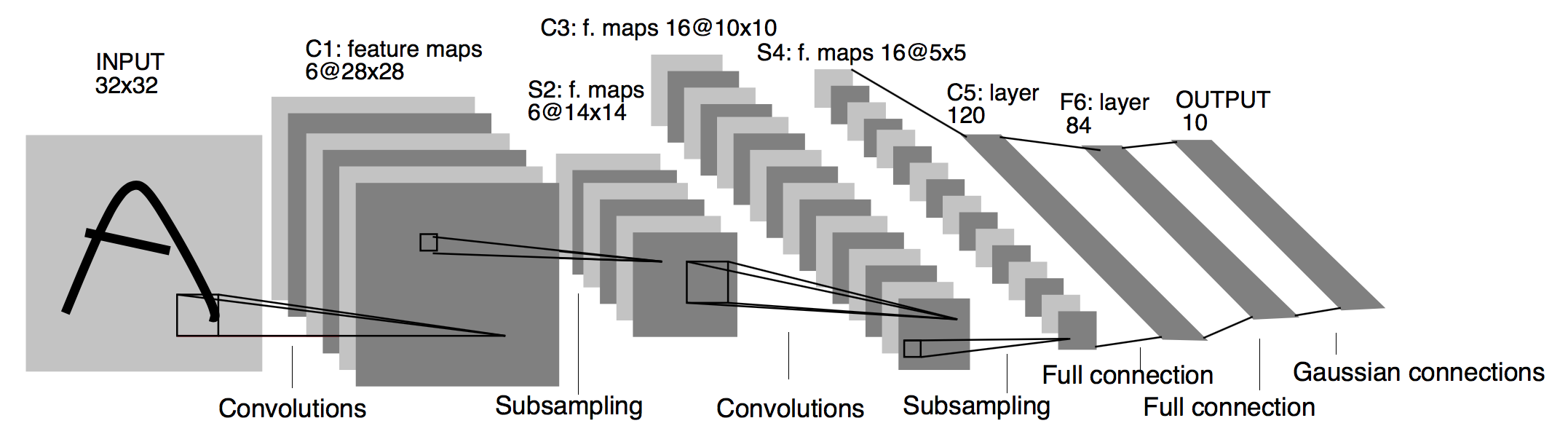

Network architecture diagram

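Import the required packages

```python
import sys
import time  # used by train() to time each epoch

import torch
import torch.nn as nn

# Make the local d2lzh_pytorch package importable from the current directory.
sys.path.append(".")

import d2lzh_pytorch as d2l
```

Build the model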

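```python
class LeNet(nn.Module):
    def __init__(self, *args, **kwargs) -> None:
        super().__init__(*args, **kwargs)
        # Feature extractor: two conv -> sigmoid -> max-pool stages.
        self.conv = nn.Sequential(
            nn.Conv2d(1, 6, 5),  # in_channels, out_channels, kernel_size
            nn.Sigmoid(),
            nn.MaxPool2d(2, 2),  # kernel_size, stride
            nn.Conv2d(6, 16, 5),
            nn.Sigmoid(),
            nn.MaxPool2d(2, 2),
        )
        # Classifier head.
        self.fc = nn.Sequential(
            # 16 * 5 * 5 applies to the 32x32 inputs of the classic LeNet.
            # Fashion-MNIST images are 28x28 and the convolutions use no padding,
            # so the feature map shrinks 28 -> 24 -> 12 -> 8 -> 4 and the
            # flattened size is 16 * 4 * 4.
            nn.Linear(16 * 4 * 4, 120),
            nn.Sigmoid(),
            nn.Linear(120, 84),
            nn.Sigmoid(),
            nn.Linear(84, 10),
        )

    def forward(self, x):
        # Flatten (batch, 16, 4, 4) to (batch, 256) before the linear layers.
        return self.fc(self.conv(x).view(x.shape[0], -1))
```

Train the model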
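Before training, it can help to confirm where the `16 * 4 * 4` in the first linear layer comes from. The following is a minimal sketch in plain Python, assuming the standard output-size formula for a convolution or pooling layer without dilation, floor((n + 2p - k) / s) + 1. The helper names `out_size` and `lenet_feature_size` are hypothetical and not part of the original code.

```python
def out_size(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a conv or pooling layer (no dilation)."""
    return (n + 2 * padding - kernel) // stride + 1


def lenet_feature_size(n: int) -> int:
    """Side length of the feature map after LeNet's conv stack for an n x n input."""
    n = out_size(n, kernel=5)            # Conv2d(1, 6, 5)
    n = out_size(n, kernel=2, stride=2)  # MaxPool2d(2, 2)
    n = out_size(n, kernel=5)            # Conv2d(6, 16, 5)
    n = out_size(n, kernel=2, stride=2)  # MaxPool2d(2, 2)
    return n


for side in (28, 32):
    s = lenet_feature_size(side)
    print(f"{side}x{side} input -> 16 x {s} x {s} = {16 * s * s} features")
```

With 28x28 Fashion-MNIST images this gives 16 x 4 x 4 = 256 features, and with the 32x32 inputs of the classic LeNet it gives 16 x 5 x 5 = 400. So `16 * 5 * 5` only fits if the images are resized to 32x32 or the first convolution is padded by 2.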

Training here runs on the GPU for acceleration.

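```python
def test_accuracy(net, test_iter, device):
    """Return the fraction of correctly classified samples in test_iter."""
    acc_sum, n = 0, 0
    # Gradients are not needed for evaluation.
    with torch.no_grad():
        for X, y in test_iter:
            X = X.to(device)
            y = y.to(device)
            output = net(X)
            # The predicted class is the index of the largest output score.
            acc_sum += (output.argmax(dim=1) == y).sum().cpu().item()
            n += y.shape[0]
    return acc_sum / n
```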
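The accuracy bookkeeping in `test_accuracy` boils down to comparing the index of the largest score in each row with the label, then dividing the number of matches by the number of samples. Here is a small worked example in plain Python, using made-up scores and labels for three classes (the values and the `argmax` helper are illustrative only):

```python
def argmax(row):
    """Index of the largest value in a list (the first one wins on ties)."""
    return max(range(len(row)), key=row.__getitem__)


# Hypothetical model outputs for 4 samples and 3 classes.
outputs = [
    [0.1, 2.0, -1.0],  # predicted class 1
    [1.5, 0.2, 0.3],   # predicted class 0
    [0.0, 0.1, 0.9],   # predicted class 2
    [0.4, 0.3, 0.2],   # predicted class 0
]
labels = [1, 0, 1, 0]

predictions = [argmax(row) for row in outputs]
correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)
print(predictions, correct, accuracy)  # [1, 0, 2, 0] 3 0.75
```

Dividing by the number of samples, rather than by the number of batches, is what makes the result a fraction between 0 and 1.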

```python
def train(net, train_iter, test_iter, batch_size, optimizer, device, num_epochs):
    net = net.to(device)
    print("training on", device)
    loss = nn.CrossEntropyLoss()
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n, batch_count = 0.0, 0, 0, 0
        start = time.time()
        for X, y in train_iter:
            X = X.to(device)
            y = y.to(device)
            output = net(X)
            l = loss(output, y)
            optimizer.zero_grad()
            l.backward()
            optimizer.step()
            train_l_sum += l.cpu().item()
            train_acc_sum += (output.argmax(dim=1) == y).sum().cpu().item()
            n += y.shape[0]
            batch_count += 1
        test_acc = test_accuracy(net, test_iter, device)
        # The loss is averaged over batches; the training accuracy is divided by
        # the number of samples (n) so it is a fraction comparable to test acc.
        print(
            f"epoch: {epoch + 1}, loss:{train_l_sum / batch_count:.2f},"
            f"train acc:{train_acc_sum / n:.2f},test acc:{test_acc:.2f},"
            f"time:{time.time() - start:.2f}"
        )
```

main function

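Set up the data, the network, and the optimizer (Adam is used here), then start training. The cross-entropy loss itself is created inside `train`.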

```python
# read data
batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

# build network
net = LeNet()
# Use the GPU when one is available; otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
optimizer = torch.optim.Adam(net.parameters(), lr=0.001)
num_epochs = 5
train(net, train_iter, test_iter, batch_size, optimizer, device, num_epochs)
```

Displaying test results
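The display code in this section relies on `d2l.get_fashion_mnist_labels` to turn numeric class indices into readable names. The sketch below is a hypothetical stand-in, not the d2l implementation. It assumes a plain lookup into the ten Fashion-MNIST class names in their standard index order, and the names `FASHION_MNIST_CLASSES` and `labels_to_text` are made up for illustration.

```python
# The ten Fashion-MNIST classes in their standard index order (0-9).
FASHION_MNIST_CLASSES = [
    "t-shirt", "trouser", "pullover", "dress", "coat",
    "sandal", "shirt", "sneaker", "bag", "ankle boot",
]


def labels_to_text(labels):
    """Map an iterable of class indices to class names (hypothetical helper)."""
    return [FASHION_MNIST_CLASSES[int(i)] for i in labels]


# Made-up true and predicted indices for three samples.
true_label = labels_to_text([9, 2, 1])
pred_label = labels_to_text([9, 4, 1])

# Same title format as the display code: true label on top, prediction below.
titles = [t + "\n" + p for t, p in zip(true_label, pred_label)]
print(titles)
```

Putting the true label on the first line and the prediction on the second makes misclassified images easy to spot in the plotted grid.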

```python
# This could also be moved into d2l and used as a function.
X, y = next(iter(test_iter))
X, y = X.to(device), y.to(device)
true_label = d2l.get_fashion_mnist_labels(y.cpu().numpy())
pred_label = d2l.get_fashion_mnist_labels(net(X).argmax(dim=1).cpu().numpy())
# Each title shows the true label on the first line and the prediction on the second.
titles = [true + "\n" + pred for true, pred in zip(true_label, pred_label)]
d2l.show_fashion_mnist(X[0:9].cpu(), titles[0:9])  # show the first nine samples
```

Output

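```
training on cuda
epoch: 1, loss:1.82,train acc:87.16,test acc:0.58,time:2.58
epoch: 2, loss:0.92,train acc:166.8,test acc:0.69,time:2.39
epoch: 3, loss:0.74,train acc:184.4,test acc:0.73,time:2.34
epoch: 4, loss:0.67,train acc:189.3,test acc:0.74,time:2.30
epoch: 5, loss:0.62,train acc:193.3,test acc:0.75,time:2.33
```

In this run, train acc is the number of correct predictions divided by the number of batches (`train_acc_sum / batch_count`). With a batch size of 256 it therefore reads as an average count of correct predictions per batch rather than a fraction. Dividing by the number of samples `n` instead gives a value in [0, 1] that can be compared directly with test acc.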
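As a reference point for reading the loss column: with 10 classes, a classifier that assigns equal probability to every class has a cross-entropy loss of ln 10 ≈ 2.303, so the first-epoch average of 1.82 is already below that chance-level value. The following is a minimal sketch in plain Python, assuming the usual definition of cross-entropy as the negative log of the softmax probability of the true class. The helper `cross_entropy` is hypothetical and only mirrors, for a single sample, what `nn.CrossEntropyLoss` computes.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# Equal scores for all 10 classes: the chance-level loss, ln(10).
uniform = cross_entropy([0.0] * 10, target=3)

# A large score on the correct class gives a much smaller loss.
confident = cross_entropy([0.0] * 9 + [5.0], target=9)

print(f"{uniform:.3f}")     # 2.303
print(confident < uniform)  # True
```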