AlexNet

2024/2/20

Key points of this section

- Understand the AlexNet network architecture

- Learn how to use torchvision.transforms.Resize(), and extend this to other torchvision.transforms classes (see here).

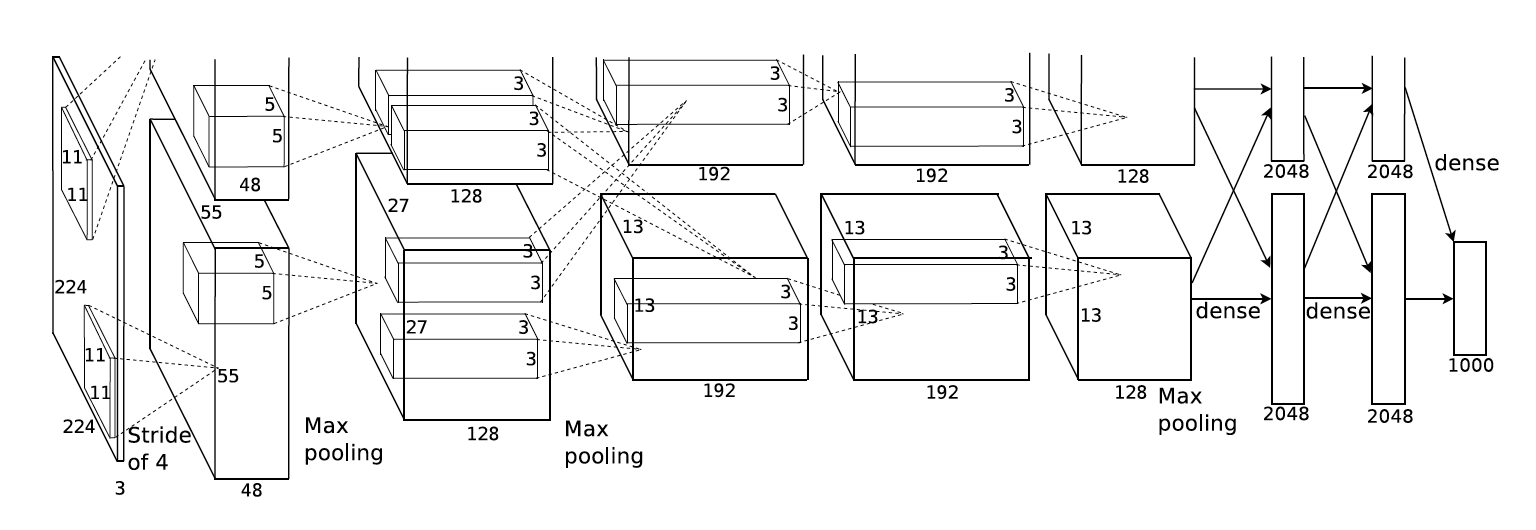

Network architecture

Building the model

import time
import torch
from torch import nn, optim
import torchvision
import sys
sys.path.append("..")
import d2lzh_pytorch as d2l

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

class AlexNet(nn.Module):
    def __init__(self):
        super(AlexNet, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 96, 11, 4),  # in_channels, out_channels, kernel_size, stride (padding defaults to 0)
            nn.ReLU(),
            nn.MaxPool2d(3, 2),  # kernel_size, stride
            # Use a smaller convolution window, with padding 2 so that the input and output
            # have the same height and width, and increase the number of output channels
            nn.Conv2d(96, 256, 5, 1, 2),
            nn.ReLU(),
            nn.MaxPool2d(3, 2),
            # Three consecutive convolutional layers with an even smaller window. Except for
            # the last one, the number of output channels is increased further.
            # No pooling layer follows the first two of them, so height and width are not reduced there
            nn.Conv2d(256, 384, 3, 1, 1),
            nn.ReLU(),
            nn.Conv2d(384, 384, 3, 1, 1),
            nn.ReLU(),
            nn.Conv2d(384, 256, 3, 1, 1),
            nn.ReLU(),
            nn.MaxPool2d(3, 2)
        )
        # The fully connected layers here have several times more outputs than those in LeNet.
        # Dropout layers are used to mitigate overfitting
        self.fc = nn.Sequential(
            nn.Linear(256*5*5, 4096),
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(),
            nn.Dropout(0.5),
            # Output layer. Since Fashion-MNIST is used here, the number of classes is 10
            # rather than the 1000 in the paper
            nn.Linear(4096, 10),
        )

    def forward(self, img):
        feature = self.conv(img)
        # Flatten each sample's feature maps before the fully connected layers
        output = self.fc(feature.view(img.shape[0], -1))
        return output

Reading the data
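The resize target of 224 is tied to the model definition: the first fully connected layer expects 256*5*5 input features, which only holds if the convolutional stack reduces the image to 5×5. The following is a minimal sketch in plain Python (no PyTorch required) that traces the spatial size through the layers of self.conv, using the standard output-size formula floor((n + 2p - k) / s) + 1. The names out_size, LAYERS and trace_sizes are illustrative and not part of the original code.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or max-pool layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel_size, stride, padding) for every size-relevant layer in self.conv
LAYERS = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]


def trace_sizes(size):
    """Return [(layer_name, output_size), ...] for a square input of the given size."""
    sizes = []
    for name, kernel, stride, padding in LAYERS:
        size = out_size(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


sizes = trace_sizes(224)
for name, size in sizes:
    print(f"{name}: {size} x {size}")

final = sizes[-1][1]
print("flattened features:", 256 * final * final)  # 256 * 5 * 5 = 6400
```

With a 224×224 input the sizes run 54, 26, 26, 12, 12, 12, 12, 5, which matches the 256*5*5 used in nn.Linear. A different input size would generally require changing that first linear layer.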

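For convenience, this section uses the simpler FashionMNIST dataset instead of ImageNet, so the 28×28 images must be enlarged to 224×224 to match the input size the network above expects. The enlargement is done with the Resize() transform from torchvision.transforms, chained with the ToTensor() converter through Compose. The data-loading function that follows applies this pipeline.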

def load_data_FashionMNIST(batch_size, resize, root="~/Datasets/FashionMNIST"):
    # Convert each image to a tensor, then resize it to the requested size
    trans = [
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Resize(size=resize),
    ]
    trans = torchvision.transforms.Compose(trans)
    # download=False assumes the dataset is already present under root
    train_mnist = torchvision.datasets.FashionMNIST(
        root=root, train=True, transform=trans, download=False
    )
    test_mnist = torchvision.datasets.FashionMNIST(
        root=root, train=False, transform=trans, download=False
    )
    train_iter = torch.utils.data.DataLoader(
        train_mnist, batch_size=batch_size, shuffle=True, num_workers=4
    )
    test_iter = torch.utils.data.DataLoader(
        test_mnist, batch_size=batch_size, shuffle=False, num_workers=4
    )
    return train_iter, test_iter

Training the model
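The main script in this section calls train(...), which is not defined in the text. As a rough sketch only, and not necessarily the function the author used, a training loop with that signature might look like the following. It assumes a classification task with cross-entropy loss; the helper evaluate_accuracy and the returned history list are illustrative additions.

```python
import torch
from torch import nn


def evaluate_accuracy(data_iter, net, device):
    """Fraction of correctly classified samples over data_iter (hypothetical helper)."""
    net.eval()  # disable dropout during evaluation
    correct, total = 0, 0
    with torch.no_grad():
        for X, y in data_iter:
            X, y = X.to(device), y.to(device)
            correct += (net(X).argmax(dim=1) == y).sum().item()
            total += y.shape[0]
    return correct / total


def train(net, train_iter, test_iter, optimizer, device, num_epochs):
    """Sketch of a training loop; assumes cross-entropy loss for classification."""
    net = net.to(device)
    loss_fn = nn.CrossEntropyLoss()
    history = []  # one (train_loss, train_acc, test_acc) tuple per epoch
    for epoch in range(num_epochs):
        net.train()  # enable dropout during training
        loss_sum, correct, total = 0.0, 0, 0
        for X, y in train_iter:
            X, y = X.to(device), y.to(device)
            y_hat = net(X)
            loss = loss_fn(y_hat, y)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            # Accumulate sample-weighted loss and the number of correct predictions
            loss_sum += loss.item() * y.shape[0]
            correct += (y_hat.argmax(dim=1) == y).sum().item()
            total += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net, device)
        history.append((loss_sum / total, correct / total, test_acc))
        print(f"epoch {epoch + 1}, loss {loss_sum / total:.4f}, "
              f"train acc {correct / total:.3f}, test acc {test_acc:.3f}")
    return history
```

Switching between net.train() and net.eval() matters for this model because the Dropout layers behave differently in the two modes.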

The main function

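if __name__ == "__main__":
    net = AlexNet()
    batch_size = 128
    resize = 224
    lr = 0.001
    num_epochs = 5
    # Fall back to the CPU when no GPU is available
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    train_iter, test_iter = load_data_FashionMNIST(batch_size=batch_size, resize=resize)
    optimizer = torch.optim.Adam(net.parameters(), lr=lr)
    # train() must be defined or imported before this call
    train(net, train_iter, test_iter, optimizer, device, num_epochs)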